Best Questions and Answers to the “Terrifying Netflix Concurrency Bug” Post

Why couldn't we roll back? Weren't we concerned about costs? Why is operating Netflix so complicated? Plus many others.

Intro

Hello friends. A few months ago, I wrote a post about a terrifying concurrency bug we experienced during my time at Netflix.

More specifically, it was about a quick fix we implemented to help us survive the weekend.

To summarize:

We hit a concurrency bug in code owned by another team that was running in our VM.

It was Friday and the owning team couldn’t have a proper fix ready until Monday at the earliest, and we weren’t able to roll back easily.

The bug caused an infinite loop on whatever CPU it ran on, so we were losing a few dozen CPUs per hour.

As a fix, we scaled our cluster to max capacity, shut off auto-scaling, and quasi-randomly terminated a few instances per hour in order to regain fresh capacity.

It worked wonderfully, and we all had a relaxing weekend instead of performing grueling operational work.

The post was recently picked up by Hacker News and a few other aggregators which brought in an unexpected but appreciated surge of traffic.

There were some interesting questions in the comment threads, so I thought I’d share them here, along with my responses, which were hidden by Hacker News because I have no karma.


I’ve paraphrased some of the questions to make them clearer. Let’s get into it.

Weren’t you concerned about running out of instances in AWS?

No — we had reservations for most of our heavily used instance types.

Wasn’t this a very expensive bandaid?

Why wasn’t cost a priority?

We were already paying for reservations, so I don’t believe this increased our costs at all.

But even if this approach had doubled our costs over the weekend, I think we'd still have made the same choice.

I’d summarize our priorities like this:

Building a valuable product

Maximizing the stability of the system

Maximizing the sanity of our engineers

Minimizing costs

So it wasn’t that we didn’t care about cost at all. We just cared more about other things.

This is a bit odd coming from the company of Chaos Engineering.

Has the Chaos Monkey been abandoned at Netflix?

Why not ramp up the Chaos Monkey instead of pursuing this solution?

There’s something about the Chaos Monkey that often gets lost — it was a cool, funny, and useful idea, but not particularly impactful when running a stateless cluster of 1000+ nodes.

On any given day, we were already auto-terminating a non-zero number of “bad” nodes for reasons we didn’t fully understand — maybe it was bad hardware, a noisy neighbor, or a handful of other things.

Whatever the reason, when we found an outlier node, we terminated it and hoped for a better replacement.

So, the Chaos Monkey wasn’t something we actively used to resolve these types of issues. It was more of a background process that discovered resiliency-related issues we weren’t aware of, such as clusters running with too few nodes or clients addressing servers directly instead of using logical DNS names or load balancers, among other things.

Were you managing instances with Kubernetes, or did you write a script to manage the auto-terminating of the instances?

We weren’t using Kubernetes, and we didn’t write a script — we used an internal tool that allowed us to query our cluster’s metrics and terminate any matching instances, up to a maximum.

To my memory, we configured the tool to do something like this: terminate any instances with CPU over 70%, limited to 5 every 15 minutes.

Note that this didn’t target only the affected instances, as most instances still met this criterion. But it at least assured us that we couldn’t terminate a new instance that was still warming up before accepting traffic.
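The rule described above can be sketched in a few lines. This is only an illustration, not the internal tool: the `Instance` type, the `pick_instances_to_terminate` function, and the random selection among matches are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    instance_id: str      # hypothetical identifier
    cpu_percent: float    # most recent CPU utilization reading


def pick_instances_to_terminate(instances, cpu_threshold=70.0,
                                max_terminations=5, rng=None):
    """Return at most `max_terminations` instances with CPU above the threshold."""
    rng = rng or random.Random()
    # Instances that are still warming up report low CPU, so they never match.
    matching = [inst for inst in instances if inst.cpu_percent > cpu_threshold]
    if len(matching) <= max_terminations:
        return matching
    # More matches than the cap allows: choose a random subset (an assumption).
    return rng.sample(matching, max_terminations)
```

A scheduler would call something like this once per 15-minute window and pass the result to whatever actually terminates instances. The cap is what keeps the churn bounded even when most of the cluster is above the threshold.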

Why was killing nodes quicker than restarting them?

Perhaps because of the business logic built into the Java application?

Not business logic. We used the tool I mentioned in the previous answer to terminate instances. It didn’t have an option to restart them instead.

To restart the node, we would likely have had to write a script that used the EC2 API directly.

To restart the process, we would likely have had to write a script to SSH into a random number of nodes and restart the Java webapp.

And whatever script we wrote, we would then have had to cron it somewhere. So using the internal metric-based tool was much easier.

How did you know that killing nodes was safe?

How was the system architected so that the requests weren't dropped altogether?

This is a great question that deserves its own post, but I’ll give an overview here.

Short answer: our software load balancing and service discovery libraries routed traffic away from nodes that died or performed poorly.

The cluster with the bug was the API backend, which was one of the origin services fronted by Zuul, our Edge proxy.

Zuul used our software load balancing library named Ribbon to send requests to origins. If Ribbon received a timeout or connection error from a given node, it would send a retry to another one.
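To make the retry behavior concrete, here is a minimal sketch of the idea. It is not Ribbon's API (Ribbon is a Java library); `NodeUnavailable`, `send_with_retry`, and the `send` callback are hypothetical names used only for illustration.

```python
class NodeUnavailable(Exception):
    """Stands in for a timeout or connection error from one node."""


def send_with_retry(nodes, send, max_attempts=3):
    """Try the request on successive nodes until one of them answers."""
    last_error = None
    attempts = nodes[:max_attempts]
    for node in attempts:
        try:
            return send(node)
        except NodeUnavailable as err:
            # This node failed; remember why and move on to the next one.
            last_error = err
    raise NodeUnavailable(f"all {len(attempts)} attempts failed") from last_error
```

The important property is that each retry goes to a different node, so a node stuck in an infinite loop costs the caller one timeout rather than a failed request.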

Zuul configured Ribbon to send requests to logical services, not physical instances. Ribbon used an internal discovery service named Eureka to maintain a registry of service-to-node mappings. Health checks were sent from nodes to Eureka at a configured cadence, and if health checks stopped arriving from a given node, Ribbon would stop sending it traffic.
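The registry side can be sketched the same way. This is a toy model of a heartbeat-based registry, not Eureka's actual implementation; the `Registry` class, its method names, and the timeout value are assumptions for the example. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
class Registry:
    """Maps logical service names to nodes that have reported in recently."""

    def __init__(self, heartbeat_timeout_s=90.0):
        # Illustrative timeout; the real cadence was configurable.
        self.heartbeat_timeout_s = heartbeat_timeout_s
        self._last_seen = {}  # (service, node) -> time of last heartbeat

    def heartbeat(self, service, node, now):
        """Record that `node` reported itself healthy for `service` at `now`."""
        self._last_seen[(service, node)] = now

    def live_nodes(self, service, now):
        """Return nodes whose last heartbeat is within the timeout."""
        return sorted(
            node
            for (svc, node), seen in self._last_seen.items()
            if svc == service and now - seen <= self.heartbeat_timeout_s
        )
```

A load balancer that only picks from `live_nodes("api", now)` stops routing to a terminated node as soon as its heartbeats age out, with no operator involvement.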

I’m not up on the latest technologies regarding service meshes, but I suspect they provide a similar function to what I’ve described here.

Instead of randomly killing nodes, why not just periodically force a deployment?

This would have been overkill. Our cluster had 1000+ nodes. A deployment would have replaced every node, while we only needed to replace a few dozen nodes per hour.

The over-the-top language in the title and throughout caused me to bounce off pretty hard.

“Terrifying”? “Carnage”? Really?

Yes, really. I try to describe not just what happened, but how it felt in the moment. Before my time at Netflix, I had never worked at such a large scale, in an environment as chaotic as mid-2010s AWS, on a product that people cared about.

Large Netflix outages made the front page of the news. At the time, I worked on the Edge team, widely considered “the front door to Netflix.” We were involved in the majority of production incidents, if only to verify that the problem wasn’t caused by us.

The worst outages felt like the closest thing to carnage that you can experience in a cubicle. So, to me, the language is not over the top. It was our reality.

If you enjoy this post, check out the early release of my new book:

It’s full of war stories about designing complex, distributed, socio-technical systems, inspired by real-world experiences.

🔥 Black Friday sale — 10% off until 12/3/2024

Gumroad discount code: L4AOG4V

Leanpub discount link: https://leanpub.com/pushtoprodordietrying/c/sxHNDILX6uc1

🧠 Discount limited to 100 sales — jump in before they’re gone

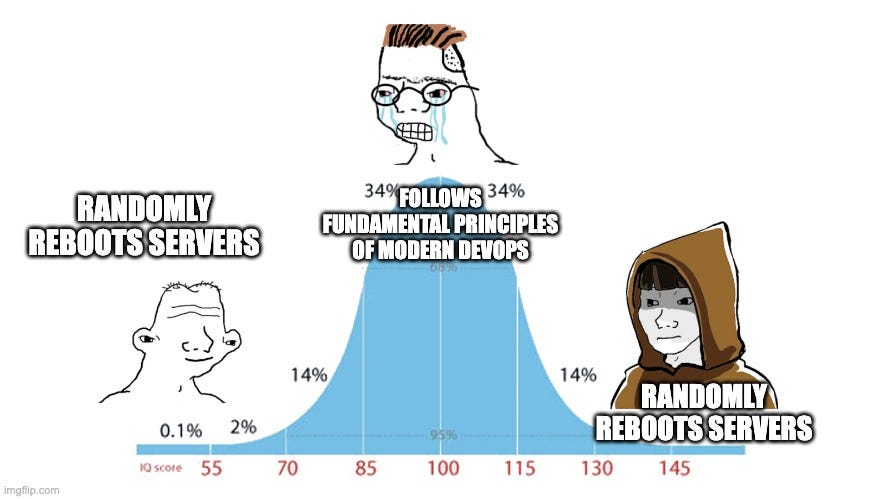

It's a fundamental principle of modern DevOps practice that rollbacks should be quick and easy, done immediately when you notice a production regression, and ideally automated.

And at Netflix's scale, one would have wanted this rollout to be done in waves to minimize risk.

Did the team investigate later why you couldn't roll back, and address it?

There was a strong response to this that I liked:

“Then DevOps principles are in conflict with reality.”

That aside, there are multiple questions here, so I’ll attempt to deconstruct them.

Why couldn’t you roll back? I can’t recall the precise details, but it wasn’t systemic. It was more that one of our clients had rolled out a feature that depended on functionality in our new build, and unraveling all of that through cascading multi-system rollbacks was determined to be less desirable than our hackish self-healing solution.

Why didn’t you have automation to roll back upon immediately noticing a regression? We weren’t comfortable with fully automated rollbacks or deployments. They were automated in that you could click a button to do it (or, one button per AWS region), but because of our location at the edge of the stack, we wanted human eyes and hands involved and ready for action if problems occurred.

Why didn’t you notice the regression sooner? This was a nondeterministic bug that slowly consumed CPUs across our cluster. Because the problem manifested so gradually, the typical methods of catching bugs or regressions weren’t effective at detecting it.

Why didn’t you roll out the new build (the one with the bug) in waves? Our rollouts weren’t in “waves,” but they were gradual: we’d run one or more canary instances with the new build through at least one evening of peak hours in each AWS region. We’d launch the same number of “control” instances, then compare the metrics, similar to how an A/B test is evaluated. But again, that wasn’t enough time for us to notice the bug.
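A canary-versus-control comparison can be as simple as the sketch below. This is not the analysis we ran; the `canary_regressed` function, the use of a plain mean, and the 10% tolerance are assumptions chosen to keep the example short.

```python
from statistics import mean


def canary_regressed(canary_samples, control_samples, tolerance=0.10):
    """Return True if the canary's mean metric is worse than the control's.

    "Worse" here means higher by more than `tolerance` (relative), which
    suits metrics such as CPU utilization or latency.
    """
    control_mean = mean(control_samples)
    canary_mean = mean(canary_samples)
    return canary_mean > control_mean * (1 + tolerance)
```

A check like this only sees what happens during the observation window. A bug that takes over one CPU at a time, at unpredictable intervals, can leave both groups looking identical for an entire evening.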

Why does Netflix pursue so much cost and complexity just to serve video files?

Almost all of the complexity seems to stem from optimizing engagement.

This post never once refers to the actual business purpose of the cluster with the bug.

Fascinating question. Short answer: As a subscription-based business, you have two choices: retain users or die.

The use of “optimizing engagement” in the question feels pejorative, and I can empathize with that based on how engagement farming negatively impacts products like YouTube and Twitter, for example.

But I would put Netflix in a different category, since they provide long-form content that doesn’t benefit from immediate and instinctual clicks. For Netflix, optimizing engagement means maximizing the time people spend watching stuff, which ideally results in them maintaining their subscriptions.

Regarding “business purpose,” the cluster with the bug was the API backend, which you could think of as a functional gateway between devices and most non-playback-related functionality, such as:

Authentication

Fetching personalized content

Search

And so on. But there are so many other nuanced aspects to creating a solid video product:

Language support for audio and subtitles

Performant UIs on all supported devices

Making stop-and-resume work effectively and consistently

Why does this seem to always work on Netflix, but inconsistently for other services?

Making search work in a sane fashion

Why do I have to sometimes type the exact show name for it to show up on Hulu?

Every aspect of personalization

Collecting useful data

Building effective models

Surfacing the results of those models everywhere possible (browse, search, vertical and horizontal sorting and placement)

Balancing AI/ML with heuristics and, for lack of a better term, “logic”

General usability and flow of the product – going from “your stuff”, to general stuff, to search, to a specific show page, to playing, to paused, and so on.

In my opinion, most video products, aside from YouTube and Netflix, are very cumbersome to use.

The core question seems to be, “Why doesn’t Netflix just focus on serving video files?”

And to reiterate, the answer is: because that would be a terrible business strategy.

Conclusion

Engineering principles such as “rollbacks should be easy, immediate, and automated”, and “let’s just focus on serving video files” can be powerful motivators for great work.

However, the companies that we work for often have different principles, such as “revenue needs to increase by 5% every year.”

As engineers, our job is to artfully balance the tension between these principles with courage and empathy. This is the work, and decision making is the primary skill.

In the original story, our decision-making skills led to terminating a handful of servers every 15 minutes to help us survive a dangerous bug.

I’ll concede that it was a surprising decision, but it was also a thoughtful and well-informed one. I hope my answers to these questions added some context to the story.

Thanks for reading and have a wonderful day. I think I’ll be doing at least one more post before the holidays, but if not, I hope you have a good one.
