Making an AB Test Allocator 20x Faster Using Non-blocking IO

Many moons ago, I inherited a cross-company AB test and had to figure out how to allocate it effectively.

Intro

Hello friends. Today I’m going to discuss a horrendously hacked-together AB test allocator I built to enable a cross-company experiment a few years ago.

NOTE: Check out the other posts from the Concurrency War Stories series here.

Background

A few years back, I worked on a “Personalization” team at a company I’ll anonymize as CableCathedral. Our team did a bunch of things related to improving content discovery for their video products.

After a reorg, I inherited a project that was a cross-company partnership between CableCathedral and our favorite fictional video streaming company Neurafilm to AB test the inclusion of recently watched Neurafilm titles in CableCathedral’s video experiences.

Integration Strategy

Since it’s unlikely that any two companies extensively share common data and infrastructure, a “cross-company experiment” is really two experiments masquerading as one.

So, there were two sides to the experiment, one at each company, and both had to allocate devices.

Before I joined the project, the companies had agreed on this integration strategy:

Neurafilm would select devices to allocate into their experiment and upload the device IDs into a shared S3 bucket.

CableCathedral would then allocate the device IDs into our experiment.

The allocations would be performed in batches of roughly 100k devices at a time. We weren’t sure how many batches there would be – we planned to keep allocating until we observed the desired amount of signal.

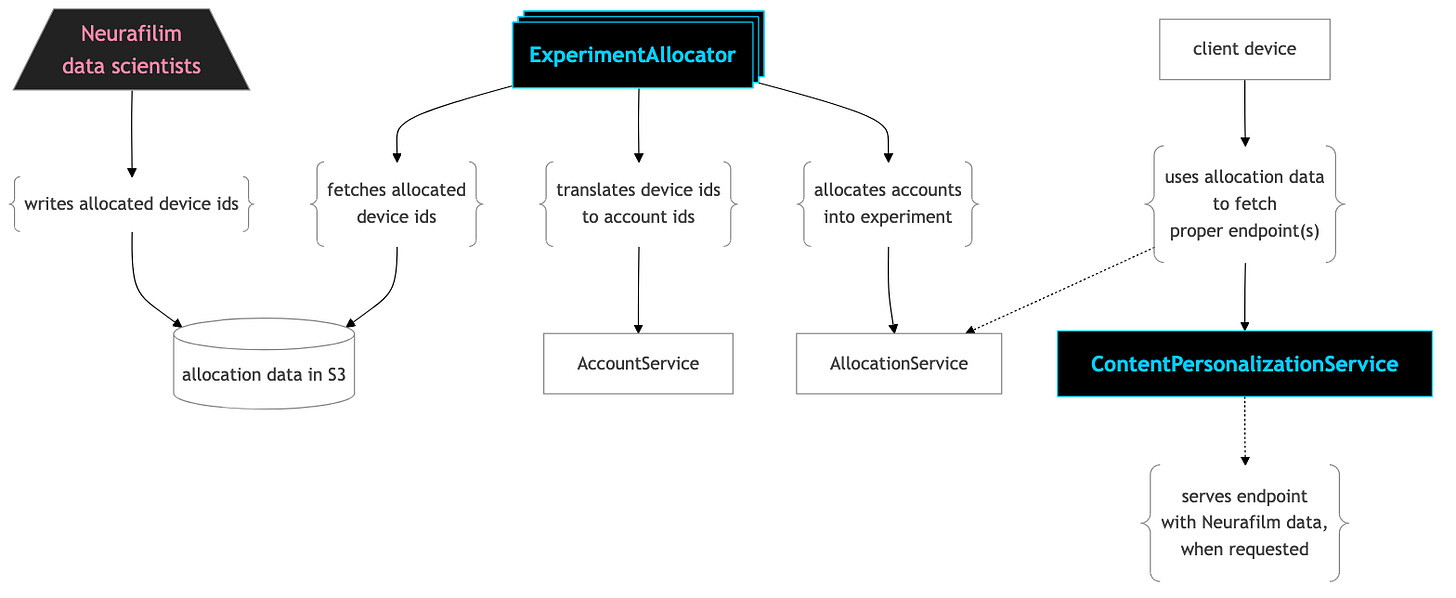

Here’s a diagram of the moving parts:

The black boxes with blue letters were the pieces my team owned.

Reiterating in textual form:

Neurafilm identified devices to allocate and wrote them into a shared S3 bucket

The yet-to-be-built ExperimentAllocator would…

Fetch the allocation data from S3

Invoke the AccountService to translate Neurafilm device IDs to CableCathedral account IDs

Write allocated account IDs into the AllocationService

Client devices used the allocation data to pass an experiment flag into the endpoints they used to fetch content

ContentPersonalizationService served personalized content, including Neurafilm data for the “Continue Watching” endpoint, if the flag was presented

I’m normalizing a lot of things here to decrease the insanity of the diagram. But these are the parts that mattered most to the story.

Environmental Design

One of my colleagues started the ExperimentAllocator as a Spark job. I took it over as he was swamped with other tasks.

My first move was to rewrite it as a standalone Python script. Why? Because what I wanted to optimize for here was flexibility.

I had a lot of uncertainty about our allocation workflow. For example, I didn’t know…

How many allocation batches we’d have.

What our failure rate would be when invoking the AccountService to translate device IDs to account IDs.

What our failure rate would be when writing to the AllocationService.

I had anecdotally heard that “sometimes it doesn’t allocate all the users you ask it to”, but I had no hard evidence

Because of this uncertainty, I envisioned a flow where I would:

Download batch files from S3 and allocate users

Find all users who weren’t successfully allocated and re-allocate them.

Here’s a visual:

This workflow was useful since allocations failed for several reasons:

Timeouts, sporadic server errors, or rate-limiting errors from the AccountService

Silent errors from the AllocationService

It was infinitely repeatable until all users were allocated. And since the error batches were notably smaller than the initial batches, I could re-allocate the error batches a lower throughput to decrease the volume of rate-limiting errors.

The more I thought about it, the more I realized that a simple collection of Python and shell scripts was a far superior solution to something more sophisticated like Spark running on AWS EMR.

Debugging in EMR was a nightmare – it often took me 5 minutes to even find the proper log file. I needed quick access to error logs, plus the ability to run the allocator on specific input files that I may generate by grepping errors from the output files of a previous run.

So I booted a random EC2 instance and got to work.

Functional But Slow

After a week or so of grinding, everything worked. My ExperimentAllocator could process the input files and invoke the appropriate services.

But there was a problem – the allocation process was too slow.

I was processing users in a single-process, single-threaded loop, so for a batch of 100k users, here’s an estimate of the total throughput and runtime:

If our per-user allocation time was 100ms, we’d finish allocating a batch in 3 hours, which was right on the edge of acceptable. Everything else was way too long.

Where did I get the 3-hour allocation time limit from? From nowhere – it was a self-imposed requirement. Due to the intricacies of how our devices picked up new configurations and the cumbersome nature of running a cross-company experiment, I wanted to ensure that we could allocate batches as quickly as possible.

I wasn’t confident our allocation time could consistently stay under 1 second for the majority of requests. So I needed better throughput.

I considered rewriting it in Java or Scala to use threads, but that seemed like a drag and would also slow down my development flow.

Enter Non-Blocking IO

A lightbulb clicked on – maybe I could try non-blocking IO in Python? It should, at least, allow me to send requests concurrently.

So that’s what I did, and after a furious few days of hacking, I had a ~500 line Python script using asyncio and aiohttp that was able to process slightly more than 200 users per second. This was a 20x improvement over my previous best-case scenario.

Let me repeat that, just to relive my moment of self-satisfaction: a 20x improvement in throughput.

I could now allocate 100k users in around 10 minutes instead of 3+ hours. Problem solved.

I could have gone multiprocess to push it further, but it wasn’t necessary.

My practical bottleneck was the throughput allowed to me by the aforementioned AccountService. The owning team allocated my access key a volume of concurrent requests that resulted in somewhere around 200 requests per second based on their latency profile.

I was able to limit the concurrency of my requests by limiting the number of Tasks I created using Python’s asyncio module. I calibrated the number until I was receiving a manageable number of HTTP 429 “rate limit exceeded” errors and then re-processed those requests in a subsequent iteration, as mentioned a few sections back.

A book full of war stories about designing complex, distributed, socio-technical systems:

Looking Back, Fully Monocled

Fast forward a few months, and we had run the experiment to both companies' satisfaction.

A few things jump out to me, in hindsight:

Don’t Automate Everything

We could have built an automated system to listen for allocation uploads in S3 and then trigger a job to start the allocation pipeline. But it would have been a poor choice.

Our environment was new and chaotic and required a lot of care and debugging, so having a human run a script was a better option to start. And since we only allocated around 10 batches, it wasn’t worth the effort to build automation just to shut it down in a few months.

I’d advocate for delaying automation as long as you can, assuming that you can maintain your sanity while doing things manually.

You can always automate something later. But once you automate it, it’s hard to go back to a purely manual system, and you often end up with a sub-optimal automated/manual hybrid.

All systems are automated/manual hybrids, although not necessarily sub-optimal. But that’s a topic for another post.

The Value of Product-Oriented Platform Teams

We weren’t an officially named “Platform” team, but I think we possessed something that product-oriented Platform teams often do: knowledge and awareness of many moving parts, from end to end, across one or more business surfaces.

I can easily imagine an alternate timeline in which a tiger team was thrown together to hack something into Prod to get this experiment moving. And maybe that would have worked. But I’m skeptical that it would have because of many nuances:

Authentication tokens that enabled our PersonalizationService to hit Neurafilm’s API

Response formats to enable transparent injection of experimental responses, so that clients didn’t have to “know” if they were in an experiment or not

Using the same Personalization endpoint to elegantly serve both control and treatment responses

The aforementioned AllocationService behavior that resulted in a low volume of silent errors

AccountService interface, latency profiles, authentication tokens, rate limits

Providing metrics to enable us to verify that the experiment was functional and well-performing from a system perspective

Ensuring data scientists had access to proper usage data to evaluate the user experience and overall experiment results

Everything is a Mess

This project was an absolute mess from a technical perspective, and it was even worse socially. I cut out many amusing anecdotes from this post that are better suited for another day and time.

But every system at every company is a mess, as we’re absorbing the complexity of real-world constraints regarding hardware, networks, business relationships, hiring practices, communication, and general software skills.

I was proud to work on this project, and I hope you found the story interesting. This is the last post in my Concurrency War Stories series, and I hope you enjoyed at least a few of them. I’ll be getting into some different topics soon. Have a wonderful day.