One Key Aspect of Optimizing Computational Throughput

When optimizing throughput, think carefully about how many CPUs you have when adding threads or processes.

Intro

NOTE: Check out the other posts from the Concurrency War Stories series here.

We’re often trying to maximize the throughput of our systems.

If you can do more stuff with fewer servers, you can either decrease your costs or maintain the same costs and do more things.

I was once asked an interview question about scaling a hypothetical Fibonacci service. The simplicity of the system allowed us to cut to the core of scaling problems.

No network problems – well, maybe some, once your request volume surpasses a system threshold.

No disk or memory problems – well, maybe some of those too, if we decide to start caching things. But that aside, the immediate problem was scaling a system that used the CPU to do very simple math.

So, for this post, let’s think about throughput in the same way – just a few simple CPUs, doing simple stuff. How much of that stuff can we do concurrently?

ANOTHER NOTE: This is a very JVM-centric post, but since JVM threads are also native processes, the concepts should apply to any stack that uses multiple processes for concurrency.

Here’s a link to the relevant part of my 2021 Concurrency War Stories talk:

When in Doubt, (Don’t) Add More Threads

The number of CPUs you have is the primary constraint on the number of things you can do concurrently.

However, in the JVM world, I cannot tell you the number of times I’ve experienced this situation:

Something is slow

You stare at config, or maybe some code

Then you stare out the window for awhile

Then someone says “let’s increase the thread pool size”

So you do it

And then observe no improvement

On occasion, the person who suggested increasing the thread pool size was me. But I was so young back then. Now I’ve evolved into a person who asks “How many CPUs do we have?”

A Thread’s Cruel Life

Here’s a copy of a slide from the video:

The intent was to illustrate all of the points-in-time where your code will stop executing on the CPU because it blocks waiting on IO:

Reading data from client request

Writing upstream request

Reading data from upstream response

Writing client response

The not-on-CPU part is true even in non-blocking IO, but the underlying mechanism differs. So imagine N threads fighting for the CPU at any of these points. But they can contend for the CPU at any point, not just IO boundaries, so as your thread-to-CPU ratio grows higher, you’ll see your code interrupted more often, even when just doing normal non-IO processing.

My point here was to encourage you to think less about thread count and more about CPU count.

Let’s Run an Experiment

Here at Supreme Informatics we pride ourselves on presenting hard data to persuade, not just words and theory. So let’s run an experiment with these goals in mind:

Verify that CPU count is more impactful on system throughput than thread count

Suggest an ideal Thread-to-CPU ratio

I wrote a performance test in Java which did this:

Creates an ExecutorService with $threadCount threads

Creates $threadCount Runnables and submits them to the ExecutorService

The Runnables loop infinitely until interrupted, sleeping for $sleepMillis milliseconds, incrementing a counter on each loop.

After $runtimeSeconds seconds, shutdown ExecutorService

Test Environment

Java 21, on this machine:

Test Design and Results

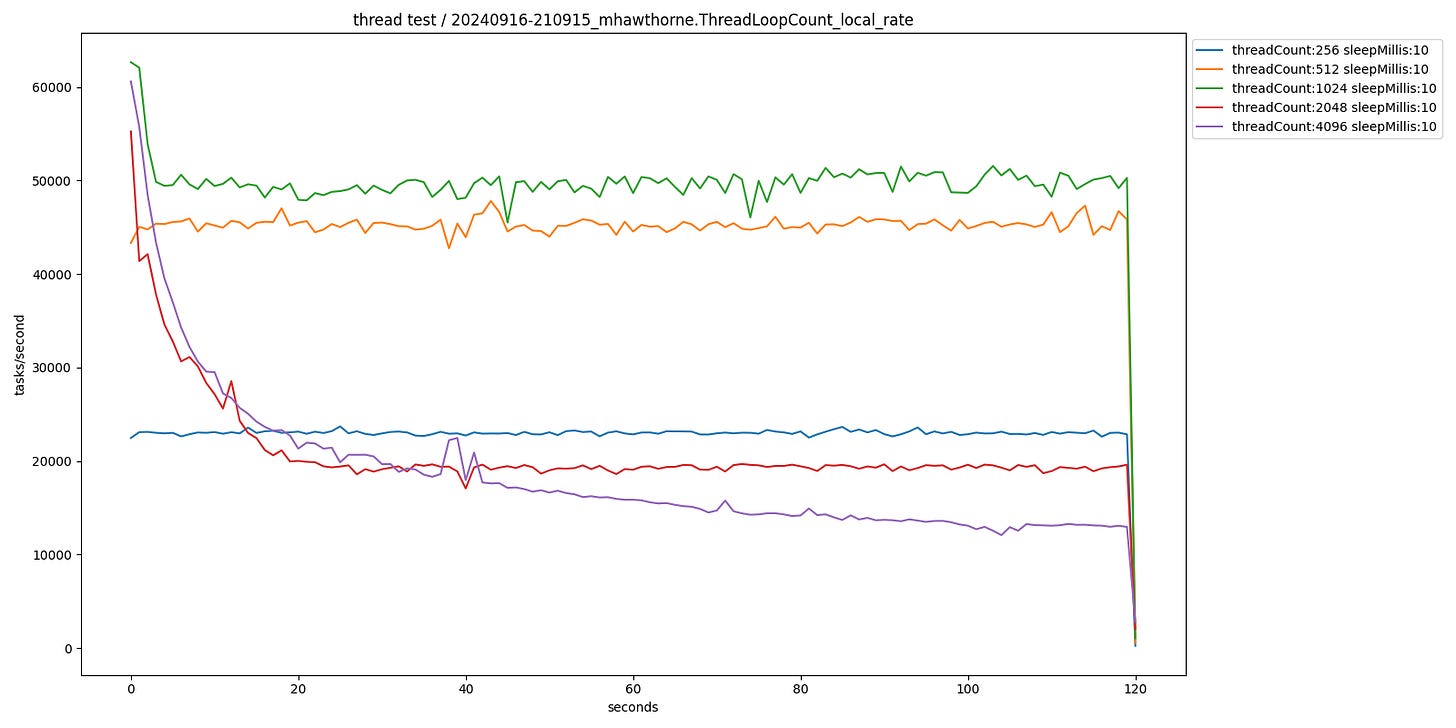

Below is a graph of tasks completed per second, for each of the thread counts. Note that these tests were completely independent - all run sequentially, not concurrently.

The shape of this was similar to what I expected. Runs with a thread count higher than 1024 saw decreasing throughput as the test ran.

So for the purpose of this very course test, 1024 is our ideal number of threads.

“Decreasing throughput” is an understatement, if we look at a graph of total cumulative tasks completed:

We can see that the 1024-thread test completes over twice as many tasks (5984505) as the 2048-thread test (2531106).

At around the 60-second mark, even the 256-thread test passes both the 2048-thread and 4096-thread tests in cumulative tasks completed.

We achieved better throughput with 10x fewer threads — imagine that.

Learn how to build high-volume systems, thrive through sociotechnical challenges, and survive gruesome production incidents.

Conclusions, Caveats, and Further Research

This was not a perfectly pure test, nor was it intended to be.

Let’s revisit my goals:

1 - Verify that CPU count is more impactful on system throughput than thread count

I didn’t hit this point exactly in the initial test. But I did confirm that there are diminishing returns once you increase your thread-to-CPU ratio beyond a threshold.

2 - Suggest an ideal Thread-to-CPU ratio

For this test, the number seems to be somewhere between 64 and 128. My intuition says it is closer to 64.

For reference:

64 = 1024 threads / 16 cores

recall that the 1024-thread test performed best

128 = 2048 / 16 cores

We know that 128 is too high since the performance of the 2048-thread test was significantly worse than the 1024-thread test. But we didn’t test for exactly where the boundary was.

I did some additional testing with variable CPU counts in Docker, but that’s too much to fit into this post. Look for that one in the near future. Until then, thanks for reading, and have a wonderful day.